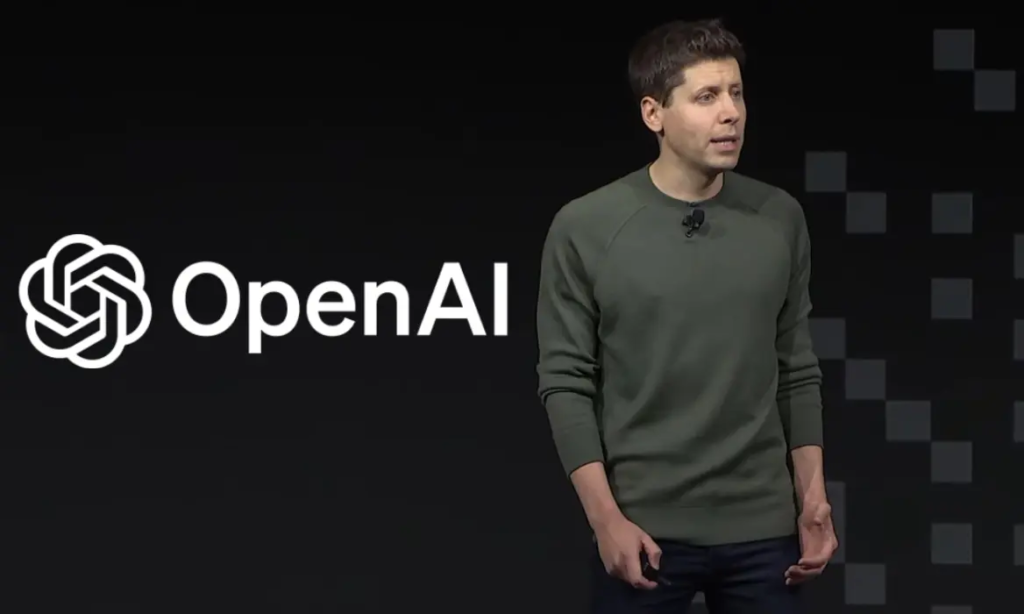

In a move aimed at reinforcing accountability and proactively addressing potential risks, OpenAI has declared that its board will wield final authority in determining the safety of new AI models. This decision is part of OpenAI’s broader initiative to curb ‘catastrophic risks’ associated with artificial intelligence (AI) systems. Let’s look at the details of OpenAI’s commitment to prioritizing safety and the implications of granting the board this pivotal role.

The Role of Oversight in AI Safety

OpenAI, at the forefront of AI research and development, recognizes the imperative of establishing robust safety mechanisms in the ever-evolving landscape of artificial intelligence. The announcement underscores OpenAI’s commitment to proactively manage the potential risks associated with AI, reflecting a sense of responsibility towards the broader societal implications of advanced technology.

OpenAI’s Preparedness Framework is designed to act as a stringent safety net, mitigating risks that range from cybersecurity threats to the misuse of AI models to help create biological, chemical, or nuclear weapons. The framework lays out how OpenAI will track, evaluate, forecast, and protect against the threats posed by increasingly capable AI models.

One of the key aspects of OpenAI’s strategy is giving its board of directors the final say on the safety of new AI models: company leadership makes the decisions on whether to release a model, but the board holds the right to reverse them. This adds an extra layer of scrutiny when evaluating the potential risks posed by emerging AI technologies. By placing ultimate responsibility in the hands of the board, OpenAI aims to foster transparency and accountability in its pursuit of cutting-edge AI advancements.

OpenAI’s emphasis on mitigating ‘catastrophic risks’ acknowledges the transformative power of AI and the need to address unintended consequences before they arise. In the framework, a ‘catastrophic risk’ is one that could result in hundreds of billions of dollars in economic damage or lead to the severe harm or death of many individuals. OpenAI’s safety-first approach aligns with the evolving discourse on responsible AI development.

Balancing Innovation and Safety

As AI technologies continue to advance, there is a delicate balance between fostering innovation and safeguarding against potential risks. OpenAI’s decision to give the board the final say on safety reflects a nuanced approach to navigating this balance. The board, composed of experts in various relevant fields, is positioned to weigh comprehensive assessments of AI models, ensuring that technological progress aligns with ethical and safety considerations.

Before a decision ever reaches the board, OpenAI runs a series of safety checks within its Preparedness Framework. A dedicated “preparedness” team, led by Massachusetts Institute of Technology professor Aleksander Madry, evaluates and monitors potential risks. The team synthesizes these risks into scorecards, rating each as “low,” “medium,” “high,” or “critical.” Only models with a post-mitigation score of “medium” or below can be deployed, and only models with a post-mitigation score of “high” or below can be developed further.
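The two thresholds above amount to a simple decision rule. The Python sketch below illustrates it under stated assumptions: the `RiskLevel` enum, the `can_deploy` and `can_develop_further` functions, and the category names are hypothetical, and it assumes a model’s overall standing is set by its highest post-mitigation score across tracked categories. It is an illustration of the rule as described, not OpenAI’s actual tooling.

```python
from enum import IntEnum
from typing import Mapping


class RiskLevel(IntEnum):
    """The four scorecard levels, ordered from least to most severe."""
    LOW = 0
    MEDIUM = 1
    HIGH = 2
    CRITICAL = 3


def _worst(scorecard: Mapping[str, RiskLevel]) -> RiskLevel:
    # Assumption (hypothetical): the overall score is the highest
    # post-mitigation score across all tracked risk categories.
    if not scorecard:
        raise ValueError("scorecard must contain at least one category")
    return max(scorecard.values())


def can_deploy(scorecard: Mapping[str, RiskLevel]) -> bool:
    # Deployment threshold: post-mitigation "medium" or below.
    return _worst(scorecard) <= RiskLevel.MEDIUM


def can_develop_further(scorecard: Mapping[str, RiskLevel]) -> bool:
    # Further-development threshold: post-mitigation "high" or below.
    return _worst(scorecard) <= RiskLevel.HIGH


# Hypothetical post-mitigation scorecard for an illustrative model.
scorecard = {"cybersecurity": RiskLevel.MEDIUM, "cbrn": RiskLevel.HIGH}
print(can_deploy(scorecard))           # False
print(can_develop_further(scorecard))  # True
```

In this example, a single “high” score blocks deployment but still permits further development, matching the two thresholds described in the text.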

OpenAI’s proactive stance in handing over safety oversight to its board sets a precedent for the broader AI community. It underscores the importance of establishing clear governance structures and ethical frameworks to guide AI development. By championing transparency and accountability, OpenAI aims to influence the industry’s best practices and contribute to the responsible evolution of artificial intelligence.

OpenAI’s announcement that its board will have the final say on the safety of new AI models is a significant milestone in the ongoing discourse on AI ethics and responsibility. As the organization navigates the intricate landscape of technological innovation, its commitment to mitigating ‘catastrophic risks’ demonstrates a proactive and responsible approach. By entrusting the board with this critical role, OpenAI contributes to shaping a future in which advanced technologies serve, rather than threaten, societal well-being.